Apple AI has a ‘creepy’ hidden folder of women’s brassiere photos

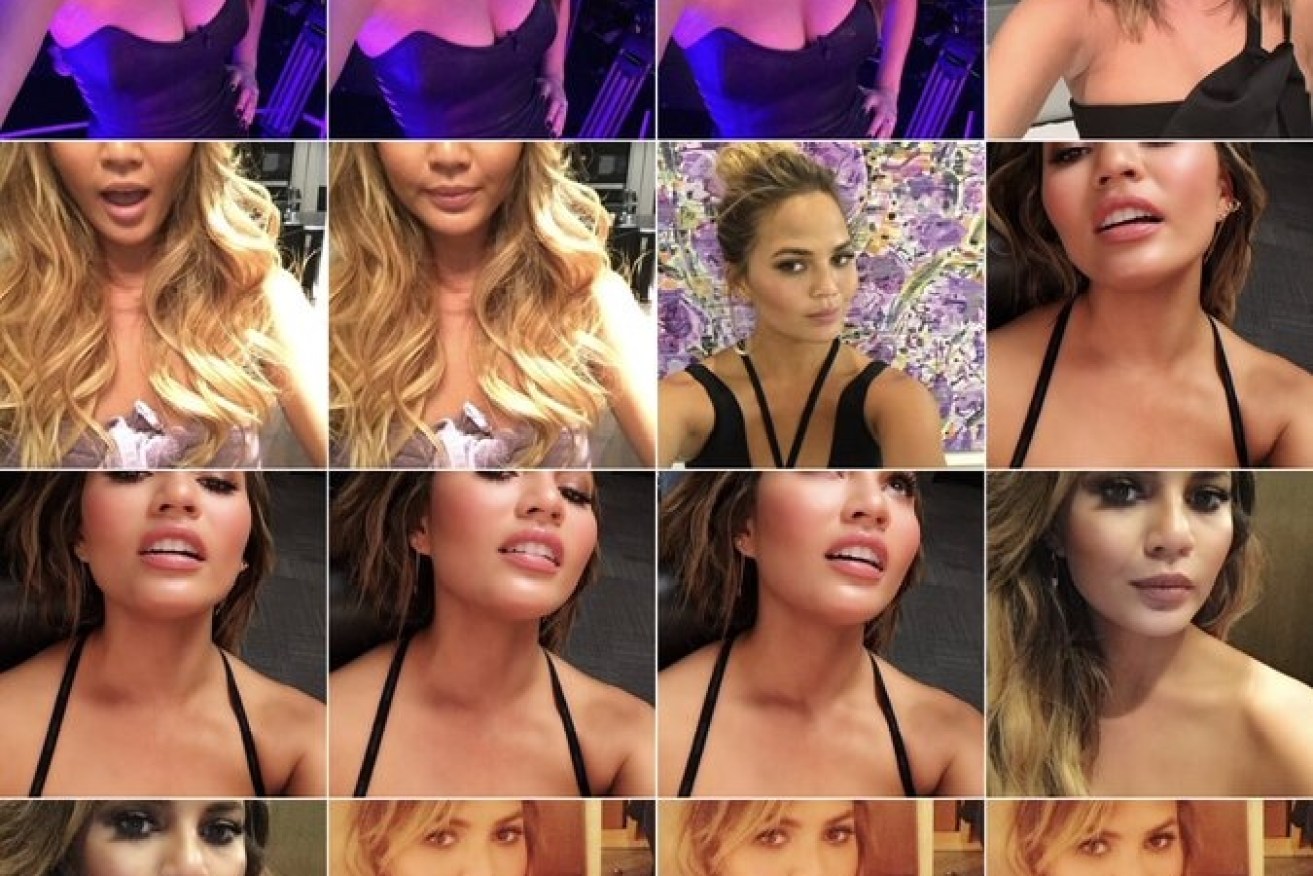

Chrissy Teigen has warned female iPhone users that Apple is categorising photos of their cleavage. Photo: Twitter/Chrissy Teigen

Apple's AI image recognition technology, which allows iPhone users to search their photos using certain terms, has a “brassiere” category specifically designed to identify images featuring bras, it has been revealed.

US model Chrissy Teigen this week put iPhone rumours to rest when she posted a screenshot of her own phone’s search results after typing the word ‘brassiere’ into Apple’s ‘Photos’ app.

The search term promptly located all of Teigen’s photos in which she was wearing low-cut dresses or tops revealing cleavage.

“It’s true. If you type ‘brassiere’ in the search of your iPhotos, it has a category for every boob or cleavage pic you’ve ever taken. Why?” she tweeted.

“Typing ‘food’ will get you ‘food’, but ‘penis’ won’t get you ‘penis’ and ‘boobs’ won’t get you ‘boobs’. Just brassiere. *Strokes beard*.”

Apple declined to give The New Daily an on-the-record comment about its creation of the ‘brassiere’ category, despite repeated requests for a statement.

It's true. If u type in "brassiere" in the search of your iphotos, it has a category for every boob or cleavage pic you've ever taken. Why. pic.twitter.com/KWWmJoRneJ

— chrissy teigen (@chrissyteigen) October 31, 2017

Instead, Apple directed TND to the company’s ‘approach to privacy’ page on its website.

“Apple harnesses machine learning to enhance your experience – and your privacy,” it reads.

“We’ve used it to enable image and scene recognition in Photos, predictive text in keyboards and more.

“… Apps can analyse user sentiment, classify scenes, translate text, recognise handwriting, predict text, tag music and more without putting your privacy at risk.”

But among the thousands of search terms built into Apple's AI technology to help identify common objects and scenes such as ‘food’, ‘garden’ and ‘dog’, the inclusion of ‘brassiere’ stands out as a “strange” decision.

Swinburne University of Technology’s Philip Branch, an associate professor with research interests in security and machine learning, said he found it difficult to understand why Apple’s AI had created the ‘brassiere’ category.

“As noted by others, the clothing categories are quite limited. There’s socks, shoes but no trousers or underwear, for example. Why brassieres?” he said.

“Apple claims it doesn’t sync these folders with other devices and the folder is only created when the search term is invoked, so the risk is not as bad as it could be.

“Nevertheless, it is hard to see what possible benefit this category provides. But I can imagine that it could have some risk if someone breaks into the owner’s phone and wants to obtain personal pictures quickly.”

if u search thru ur photos on iphone n type “brassiere”, it’ll show you which pics that show your cleavage 😱😱 im freaked out

— tiff (@tiffffff_) October 31, 2017

Look up brassiere on your photos if you have an iPhone all your nudes should show up. technology scaring me.

— Djpuke (@ianisseein) October 31, 2017

Monash University’s Robert Merkel, a lecturer in software engineering, said he suspected human intervention.

“It sounds like someone at Apple, somewhere along the line, has decided to list brassiere as a category for some reason,” he said.

“I can understand why some people find this creepy or unnerving.”

Mr Merkel said women could take some reassurance from the fact that, in this instance, the phone stores the information locally, so there is no direct privacy concern.

A list of the thousands of search terms categorised by Apple's AI, including ‘brassiere’, is available online.