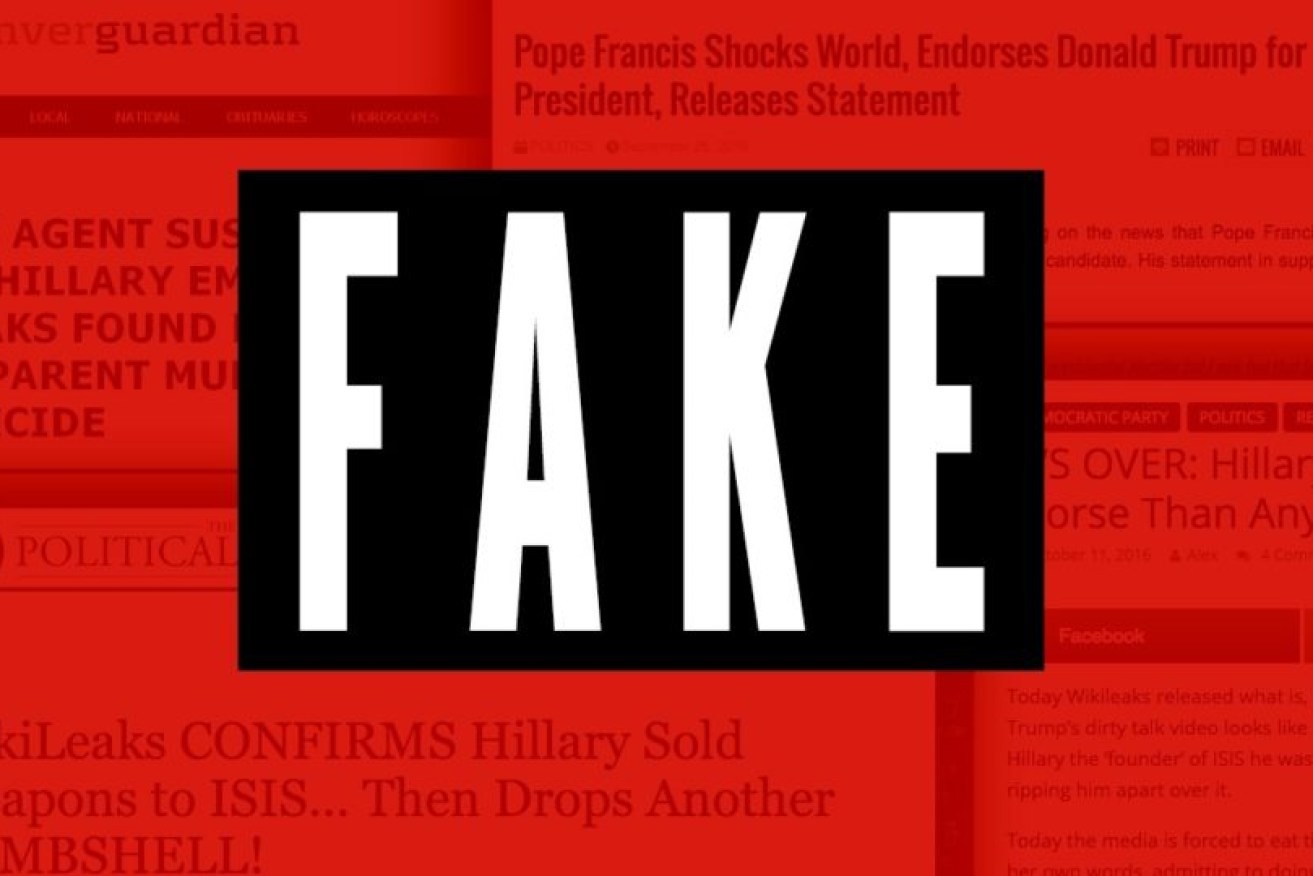

Facebook to warn users about ‘fake news’ with new flagging tool

Disputed content shared on Facebook will come with a 'fake news' disclaimer.

Facebook has begun rolling out a new tool that will warn users when their friends share “disputed content” that could be fake news.

A warning label will soon appear below stories shared on Facebook that are suspected hoaxes, alerting users to treat the content with a degree of scepticism, Gizmodo reported.

The rollout follows widespread criticism of the social media platform's role in spreading misinformation during the 2016 US presidential election.

The company first alluded to the feature in December last year, describing it as a means of filtering out “fake news”.

Facebook’s tool relies on independent third-party fact-checking organisations to identify and flag stories as “fake”.

Each organisation must be a signatory to Poynter’s International Fact-Checking Code of Principles to qualify for the role.

Once a story is flagged, it will carry a warning banner telling readers that the content is disputed.

The banner will link to a corresponding article explaining why the story should not be trusted.

Stories that have been disputed may also appear lower in News Feed and cannot appear as promoted content.

Facebook users have been encouraged to report suspicious content so that it can be reviewed by participating third-party organisations such as Snopes and PolitiFact.

Facebook research has found that much disputed content masquerading as news is financially motivated.

Spammers often make money by posing as well-known news organisations and tricking users into visiting their sites, which usually redirect to advertisements, according to Facebook.

While flagged stories can still be shared on Facebook, users will see the following alert before the post is published:

“Before you share this story, you might want to know that independent fact-checkers disputed its accuracy.”

Facebook will add ‘fake news’ warning labels to disputed content.